Google's speech-to-text app will change how more than 400 million people communicate

- By huawei-accessories.com

- 29/05/2022

Google, which has been working on speech recognition for about 10 years, launched Live Transcribe (beta), a speech-to-text app for deaf and hard-of-hearing people, in February 2019, and held a technical seminar on it in Japan on March 28. Many speech recognition technologies already exist, so what is new about Live Transcribe, and what was it developed for? Let's take a look.

Real-time transcription of more than 70 languages and dialects

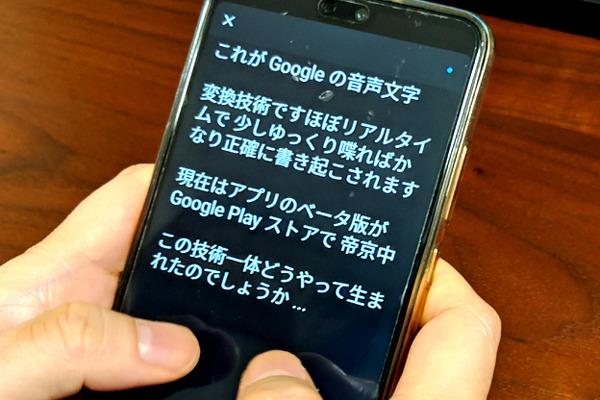

Live Transcribe is currently a beta app available on Google Play and runs on Android 5.0 (Lollipop) or later.

The app converts speech picked up by the smartphone's microphone into text in real time. Although it also takes voice input and converts it, it differs from Google voice input: almost everything spoken is picked up and converted progressively, character by character. Real-time operation is the key feature. The other key point is its broad language coverage: it supports more than 70 languages and dialects.

In particular, there are no buttons or switches to operate: you simply speak toward the phone, and your words gradually appear as text. Because the app grasps context as it transcribes, it rarely produces nonsensical results. Even after a passage appears to be finished, the app can re-check the context and revise the transcription.
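The gradual, self-correcting display described above resembles how streaming recognizers commonly report interim (still revisable) and final results. The following is a minimal sketch, assuming a recognizer that emits `(text, is_final)` updates; `TranscriptView` and `on_result` are hypothetical names for illustration, not Live Transcribe's actual API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TranscriptView:
    """Hypothetical on-screen transcript: finalized segments plus one revisable tail."""

    finalized: List[str] = field(default_factory=list)
    interim: str = ""

    def on_result(self, text: str, is_final: bool) -> None:
        if is_final:
            # The recognizer has committed this segment; clear the pending guess.
            self.finalized.append(text)
            self.interim = ""
        else:
            # A newer hypothesis replaces the previous guess instead of appending to it.
            self.interim = text

    def render(self) -> str:
        parts = self.finalized + ([self.interim] if self.interim else [])
        return " ".join(parts)


view = TranscriptView()
view.on_result("wreck a nice", is_final=False)
print(view.render())  # wreck a nice
view.on_result("recognize speech", is_final=True)
print(view.render())  # recognize speech
```

Replacing the interim text rather than appending to it is what lets earlier words change on screen once more context arrives.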

The architecture is explained in more detail below, but because the conversion is carried out mainly in the cloud, an Internet connection is required. Even on a relatively slow connection the delay is very small and transcription feels snappy, so it is well worth installing the app and trying it for yourself.

Supporting people with hearing loss through the power of AI

The goal of the app was to create a communication tool for people with hearing loss, said Sag Sabra, the company's senior product manager, who presented the technical session.

According to Mr. Sabra's estimate, the number of people worldwide with hearing loss caused by illness, accidents or aging is about 466 million, roughly 3.7 times the population of Japan. If these people formed a country, it would have the third-largest population in the world, after China and India.

Taking into account factors such as population growth, that number is expected to reach 900 million by 2055. Using technologies such as AI to support communication for these people is therefore a cause as significant as helping an entire nation.
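The figures above can be sanity-checked with a little arithmetic. This sketch uses only the numbers quoted in the article; treating 2019 (the year of the seminar) as the base year for the 466 million estimate is an assumption.

```python
# Figures quoted in the article, in millions of people.
HEARING_LOSS_NOW = 466
JAPAN_MULTIPLE = 3.7
PROJECTED = 900
BASE_YEAR = 2019  # assumption: the estimate refers to the year of the seminar
TARGET_YEAR = 2055

# Population of Japan implied by "about 3.7 times the population of Japan".
implied_japan = HEARING_LOSS_NOW / JAPAN_MULTIPLE

# Compound annual growth rate needed to go from 466 million to 900 million.
years = TARGET_YEAR - BASE_YEAR
annual_growth = (PROJECTED / HEARING_LOSS_NOW) ** (1 / years) - 1

print(f"implied population of Japan: {implied_japan:.0f} million")  # 126
print(f"implied annual growth: {annual_growth:.2%}")
```

Under that assumption, the projection corresponds to growth of a little under 2% per year.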

Mr. Sabra also said he wanted the technology to serve not only developed countries but also developing ones. For this reason, the app was built to run smoothly not only on the latest devices but also on older, lower-performance ones. It is a very ambitious app.

How does it convert speech to text?

Now let's look at how Live Transcribe performs the conversion from a technical point of view.

In this app, speech-to-text processing takes place both on the device and in the cloud. First, a neural network on the device classifies incoming audio into 570 types of sound, ranging from talking and laughter to dogs barking, babies crying and plates shattering.
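The article only says that labeled sound is sent to the cloud. One plausible way to use such on-device labels, assumed here, is to decide which audio is worth streaming to the cloud recognizer. In the sketch, `frames_to_stream` is a hypothetical name, and `toy_classify` is a loudness threshold standing in for the neural classifier, not a model of it.

```python
from typing import Callable, Iterable, Iterator, Sequence, Tuple

Frame = Sequence[float]  # one short window of audio samples (hypothetical format)


def frames_to_stream(
    frames: Iterable[Frame],
    classify: Callable[[Frame], str],
    stream_labels: Tuple[str, ...] = ("Speech",),
) -> Iterator[Tuple[str, Frame]]:
    """Label each frame on the device and yield only the ones worth sending to the cloud."""
    for frame in frames:
        label = classify(frame)  # stand-in for the on-device sound classifier
        if label in stream_labels:
            yield label, frame


def toy_classify(frame: Frame) -> str:
    """Hypothetical stand-in: a loudness threshold, NOT a neural network."""
    return "Speech" if max(abs(s) for s in frame) > 0.1 else "Silence"


audio = [[0.01, -0.02], [0.4, -0.3], [0.0, 0.05], [0.2, 0.1]]
to_cloud = list(frames_to_stream(audio, toy_classify))
print(len(to_cloud))  # 2
```

Filtering on the device means quiet or irrelevant audio never uses network bandwidth, which matters on slow connections.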

Audio labeled in this way is then sent to the cloud and fed into an automatic speech recognition (ASR) system based on a recurrent neural network (RNN). RNNs are considered highly effective in natural language processing.
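The article only says the cloud recognizer is RNN-based. The defining property of a recurrent network is that each step's hidden state depends on both the current input and the previous state, which lets it model sequences such as speech. Below is a minimal pure-Python Elman RNN forward pass with toy weights chosen for illustration; production recognizers use much larger, gated variants such as LSTMs.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = Sequence[Sequence[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply matrix m (given as rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_forward(
    inputs: Sequence[Sequence[float]], w_x: Matrix, w_h: Matrix, b: Sequence[float]
) -> List[Vector]:
    """Elman RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), starting from h_0 = 0."""
    h: Vector = [0.0] * len(b)
    states: List[Vector] = []
    for x in inputs:
        pre = [a + c + d for a, c, d in zip(matvec(w_x, x), matvec(w_h, h), b)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


# One input feature, one hidden unit: the second step sees zero input, yet its
# state is non-zero because it carries information from the first step.
states = rnn_forward([[1.0], [0.0]], w_x=[[1.0]], w_h=[[0.5]], b=[0.0])
print([round(s[0], 3) for s in states])  # [0.762, 0.363]
```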

In the ASR system, the transmitted audio passes through three stages: an acoustic model, a pronunciation model and a language model. The resulting text is then sent back to the device and shown on its screen. The language model was developed in collaboration with Gallaudet University in Washington, D.C., a university for deaf and hard-of-hearing students.
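To make the three stages concrete, here is a toy decoder. The pronunciation model maps words to phonemes, the acoustic model scores how well those phonemes match the audio, and the language model scores how plausible the word sequence is; the best candidate maximizes the sum of the two log scores. The article does not describe how Google's recognizer combines its models, so this is the textbook noisy-channel formulation, and the lexicon, scores and probabilities are invented for illustration.

```python
import math
from typing import Dict, List, Tuple

# Pronunciation model (hypothetical): maps each word to a phoneme sequence.
LEXICON: Dict[str, Tuple[str, ...]] = {
    "recognize": ("r", "eh", "k", "ah", "g", "n", "ay", "z"),
    "speech": ("s", "p", "iy", "ch"),
    "wreck": ("r", "eh", "k"),
    "a": ("ah",),
    "nice": ("n", "ay", "s"),
    "beach": ("b", "iy", "ch"),
}


def pronounce(words: List[str]) -> Tuple[str, ...]:
    """Concatenate the phonemes of each word."""
    return tuple(p for w in words for p in LEXICON[w])


# Acoustic model output (made-up numbers): log-likelihood of the recorded audio
# given each phoneme sequence. A real acoustic model scores short audio frames.
ACOUSTIC_LOGLIK: Dict[Tuple[str, ...], float] = {
    pronounce(["recognize", "speech"]): -12.0,
    pronounce(["wreck", "a", "nice", "beach"]): -11.5,
}

# Language model (made-up bigram probabilities, stored as logs).
BIGRAM_LOGPROB: Dict[Tuple[str, str], float] = {
    ("<s>", "recognize"): math.log(0.01),
    ("recognize", "speech"): math.log(0.2),
    ("<s>", "wreck"): math.log(0.001),
    ("wreck", "a"): math.log(0.05),
    ("a", "nice"): math.log(0.01),
    ("nice", "beach"): math.log(0.01),
}
UNSEEN_LOGPROB = math.log(1e-6)


def lm_logprob(words: List[str]) -> float:
    """Sum of bigram log-probabilities, starting from the sentence-start token."""
    tokens = ["<s>"] + words
    return sum(BIGRAM_LOGPROB.get(pair, UNSEEN_LOGPROB) for pair in zip(tokens, tokens[1:]))


def score(words: List[str]) -> float:
    """Combined score: log P(audio | phonemes(words)) + log P(words)."""
    return ACOUSTIC_LOGLIK[pronounce(words)] + lm_logprob(words)


def decode(candidates: List[List[str]]) -> List[str]:
    """Pick the candidate transcription with the highest combined score."""
    return max(candidates, key=score)


best = decode([["recognize", "speech"], ["wreck", "a", "nice", "beach"]])
print(" ".join(best))  # recognize speech
```

Here the acoustic model alone slightly prefers "wreck a nice beach", but the language model's knowledge of likely word sequences tips the decision, which is why the quality of the language model matters so much.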

The back-end processing itself uses the same technology as Google voice input.

However, Google voice input is designed to transcribe short utterances accurately, while Live Transcribe is designed to process long, continuous speech such as conversations as quickly as possible.

Although it varies with the type of network, Live Transcribe reportedly responds in about 200 ms (0.2 s). About 150 to 250 MB of data is exchanged over roughly 30 minutes of conversation, which is less than one might expect. The app can work well even in developing countries where high-speed LTE coverage is incomplete.
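The data volume quoted above can be converted into an average bit rate. This sketch uses the article's figures and assumes decimal megabytes (1 MB = 10^6 bytes).

```python
def average_mbps(megabytes: float, minutes: float) -> float:
    """Average data rate in megabits per second (assumes 1 MB = 10**6 bytes)."""
    megabits = megabytes * 8
    return megabits / (minutes * 60)


for mb in (150, 250):
    print(f"{mb} MB in 30 min: about {average_mbps(mb, 30):.2f} Mbit/s")
```

That works out to roughly 0.67 to 1.11 Mbit/s on average over a 30-minute conversation.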

Offline conversion, recording and translation features are also worth looking forward to

Live Transcribe offers powerful, fast speech-to-text conversion. It is valuable not only, of course, for people with hearing loss but also for people like the author who frequently conduct interviews, for whom it serves as a capable transcription partner.

Unfortunately, Live Transcribe currently cannot save its transcriptions as a text file, nor can it record audio. In the end, it only transcribes on the spot.

Many media attendees were disappointed by this, and the seminar received many questions about it that were effectively feature requests. According to Mr. Sabra, however, recording a conversation can place a psychological burden on the other party, so there are no plans to add a recording function (the same applies to saving text). Still, the author would like to see such a feature in the future and has high hopes for it.

For future versions, Google is also considering running the deep neural network processing entirely offline on the device, without using the cloud.

This would use the same offline recognition already available on Google Pixel phones with the Gboard keyboard app, in which the recognizer is compressed into an ultra-compact engine. Although it currently supports only English and is limited to the Pixel series, it has already been tested and released, so there is good reason to look forward to it.
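The article does not say how the offline recognizer was made so small. One widely used technique for shrinking neural networks is weight quantization; the sketch below shows symmetric 8-bit quantization of a list of weights, as an illustration of the general idea rather than Google's method. The function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric linear quantization: map floats onto integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


weights = [0.3, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [38, -127, 32, 0]
```

Storing each weight in one byte instead of a 4-byte float shrinks the model about fourfold, at the cost of a rounding error of at most half a quantization step (`scale / 2`) per weight.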

Google also hopes to improve accuracy when several people talk at once (the so-called "cocktail party problem") and recognition rates in noisy environments. Future AI may be like Prince Shōtoku, who, according to legend, could listen to ten people speaking at once and understand each of them separately.

Age-related hearing loss often progresses gradually and can be hard to notice; by the time people notice it, it may already be causing considerable difficulty in daily life. Even if you have no hearing problems now, there is a good chance that one day it will affect you. It is very encouraging to see solutions to such problems being developed and delivered with smartphones and the latest technology.

In the future, such features are expected to become standard on mobile devices, combining not only transcription but also translation and speech synthesis, leading to a world where anyone can communicate freely with anyone else.
